HPC Project 1 - Server Maintenance & Upgrade

Scope:

To further understand the inner workings of an enterprise-class server, clean its internals to lengthen its lifetime, and upgrade the network adapters to allow for more bandwidth to and from the system.

Step 1: Safe Shutdown

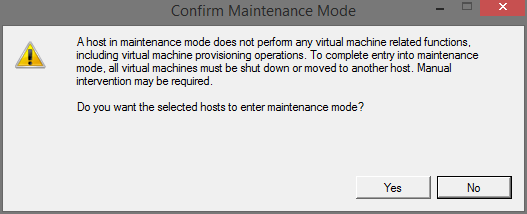

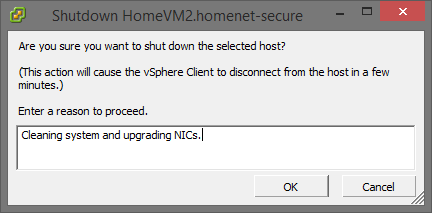

I will begin by properly shutting down the server via VMware vSphere Client. This software allows me to fully control the physical and virtual components of the system. To shut down the hardware, I must first power down all virtual machines and then place the server into “Maintenance Mode.”

Now that VMware is fully aware of my intentions of altering the system, it will not attempt to make any changes or perform scheduled maintenance on volumes in the storage array. This allows me to safely shut down the server, un-rack it, and bring it back for some work!
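The ordering in this step (guests first, then maintenance mode, then the host itself) can be written down as a tiny checklist generator. This is only a sketch of the sequence, not a vSphere API call: the function name `shutdown_plan` and the VM names in the usage note are hypothetical, and the step strings are plain labels for a human to follow.

```python
def shutdown_plan(vm_power_states):
    """Return the ordered steps for taking a host offline.

    vm_power_states maps a VM name to True if that VM is powered on.
    The result is a list of human-readable step labels (hypothetical
    wording), not commands sent to vSphere.
    """
    # Powered-on guests are shut down first, in name order for repeatability.
    steps = [
        f"shut down guest: {name}"
        for name, powered_on in sorted(vm_power_states.items())
        if powered_on
    ]
    # Only after every guest is off does the host enter maintenance mode.
    steps.append("enter maintenance mode")
    # The physical shutdown always comes last.
    steps.append("shut down host")
    return steps
```

For example, `shutdown_plan({"web": True, "db": False})` lists one guest shutdown for `web`, then maintenance mode, then the host shutdown.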

Step 2: Cleaning

Now that the system is shut down and sitting in front of me, I can remove the cover and begin cleaning. I've been told countless times that dust is a system's worst enemy. Ideally, a server could exist in a controlled environment with minimal dust, proper ventilation, and adequate temperatures. Unfortunately, that is not the case for this server, and I feel obligated to clean it out every few months just to be sure that it will continue to run well for years. I used a Swiffer Duster to clean all of the components within the server. The shroud that directs the airflow to the hottest components (CPUs, RAM, PDUs) is removed by pulling upwards on the blue tabs and slowly popping each edge out from its secured position.

With much less dust inside the system, I can now move onto the installation of an extra NIC!

Step 3: Installation

The two onboard Gigabit Ethernet adapters tend to get saturated when moving virtual machines across the network, performing network-wide backups, or doing too many things on too many machines at the exact same time. To alleviate this first-world problem, I picked up a cheap Intel Dual-Gigabit PCIe x4 card from eBay for roughly $30! Intel has great NICs that offload a lot of work from your system over to the NIC's onboard processor. Hopefully this little guy helps down the road with bandwidth performance. The installation of the card into the server is quite simple. To allow the use of PCI cards, most server manufacturers (Dell, in this case) use a PCI riser to turn the PCI slot sideways and allow a full card to fit into a slim 1U or 2U rack-mounted server.
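To put the saturation problem in rough numbers, here is a back-of-the-envelope sketch of the ideal transfer time before and after going from two gigabit ports to four. It assumes traffic spreads evenly across every link and ignores protocol overhead; in practice a single transfer is often pinned to one link, so treat the result as a best case. The function name and the 40 GB figure in the usage note are made up for illustration.

```python
def transfer_seconds(size_gb, links, link_gbit=1.0):
    """Best-case seconds to move size_gb gigabytes over `links` links.

    Assumes each link carries link_gbit gigabits per second, traffic is
    split evenly across links, and there is no protocol overhead.
    """
    if links < 1:
        raise ValueError("need at least one link")
    size_gbit = size_gb * 8  # gigabytes to gigabits
    return size_gbit / (links * link_gbit)
```

With a hypothetical 40 GB virtual machine, `transfer_seconds(40, 2)` gives 160 seconds on the two onboard ports, and `transfer_seconds(40, 4)` gives 80 seconds once the dual-port card is added.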

The PCI Riser

The PCI-E NIC

Installing the Card

All Done!

Step 4: Configuration

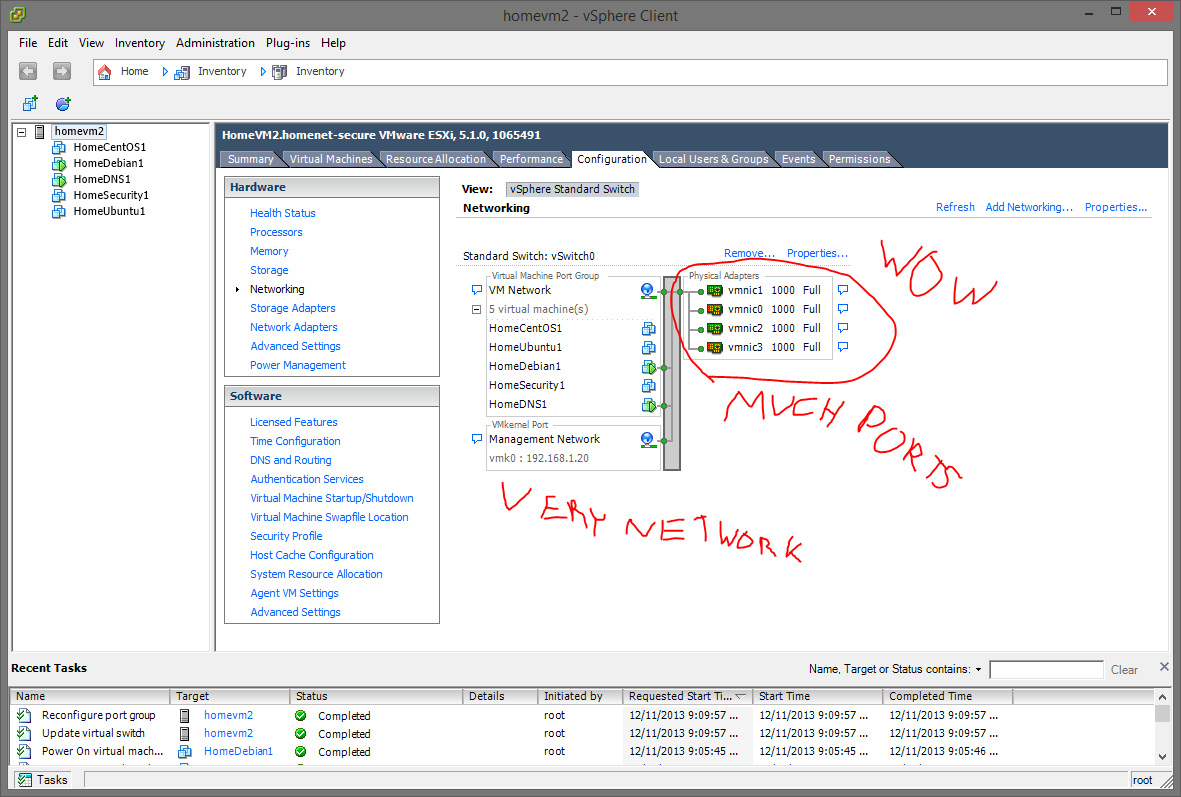

The server is now back in its original environment. It's online and accessible via VMware vSphere Client. After logging in, I exit maintenance mode and decide to manually start the virtual machines. Here's how…

Now I can add the new NICs to the existing virtual switch!

Conclusion:

Working inside a server seems intimidating at first. Think back to your first time opening up a computer and having very little knowledge of what everything was. Aside from the obvious components like the hard drives, the memory sticks, and these PCI cards, the internals were quite unfamiliar. The motherboard spans the majority of the system and differs dramatically from a typical “ATX” motherboard. The power supplies (which were not present for any of the pictures) are modular and allow you to hot-swap one at a time even while the system is running. The redundancy features in these servers are really impressive. Another feature that was unfamiliar to me was the SAS module that controlled the I/O of the disk drives. It had some sort of backup battery on it and was a separate component from the motherboard. Through that, I created a RAID-5 array when I first configured this system. VMware uses this array as a virtual storage device, from which I allocate specific amounts of space to new machines. I can even shrink or expand the logical disks if a situation ever calls for that.
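For anyone wondering how much space a RAID-5 array like this actually yields, the usual rule is that one disk's worth of capacity goes to parity, so usable space is (number of disks minus one) times the disk size. The sketch below applies that rule and subtracts whatever has already been handed out to virtual machines. The disk counts and sizes in the usage note are hypothetical, not this server's real configuration, and the helper names are my own.

```python
def raid5_usable_gb(disk_count, disk_size_gb):
    """Usable capacity of a RAID-5 array of equal-sized disks.

    One disk's worth of space is consumed by parity, and RAID-5
    needs at least three disks.
    """
    if disk_count < 3:
        raise ValueError("RAID-5 needs at least three disks")
    return (disk_count - 1) * disk_size_gb


def remaining_gb(usable_gb, allocations_gb):
    """Space left after carving out virtual disks (sizes in GB)."""
    remaining = usable_gb - sum(allocations_gb)
    if remaining < 0:
        raise ValueError("allocations exceed usable capacity")
    return remaining
```

As an illustration, four hypothetical 300 GB disks give `raid5_usable_gb(4, 300)` = 900 GB, and after allocating 200 GB and 150 GB to two machines, `remaining_gb(900, [200, 150])` leaves 550 GB.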

I've slowly grown more comfortable working inside servers over the last few months. Manufacturers do a pretty good job of making them accessible, modular, easily upgraded, and powerful, all in this 1U device. Crazy.