PNC2 - Graph your pnc1 results

Submission requirements:

Only submit code that you changed further while working on pnc2. If you have not changed any code, there is no need to resubmit anything old.

Definitely submit graphs, supporting data, and ANALYSIS (what realizations you had from visualizing the results, and what hypotheses or statements can be made) on the pnc2 project page.

http://www.boutell.com/gd/manual2.0.33.html

https://libgd.github.io/manuals/2.1.1/index/Functions.html

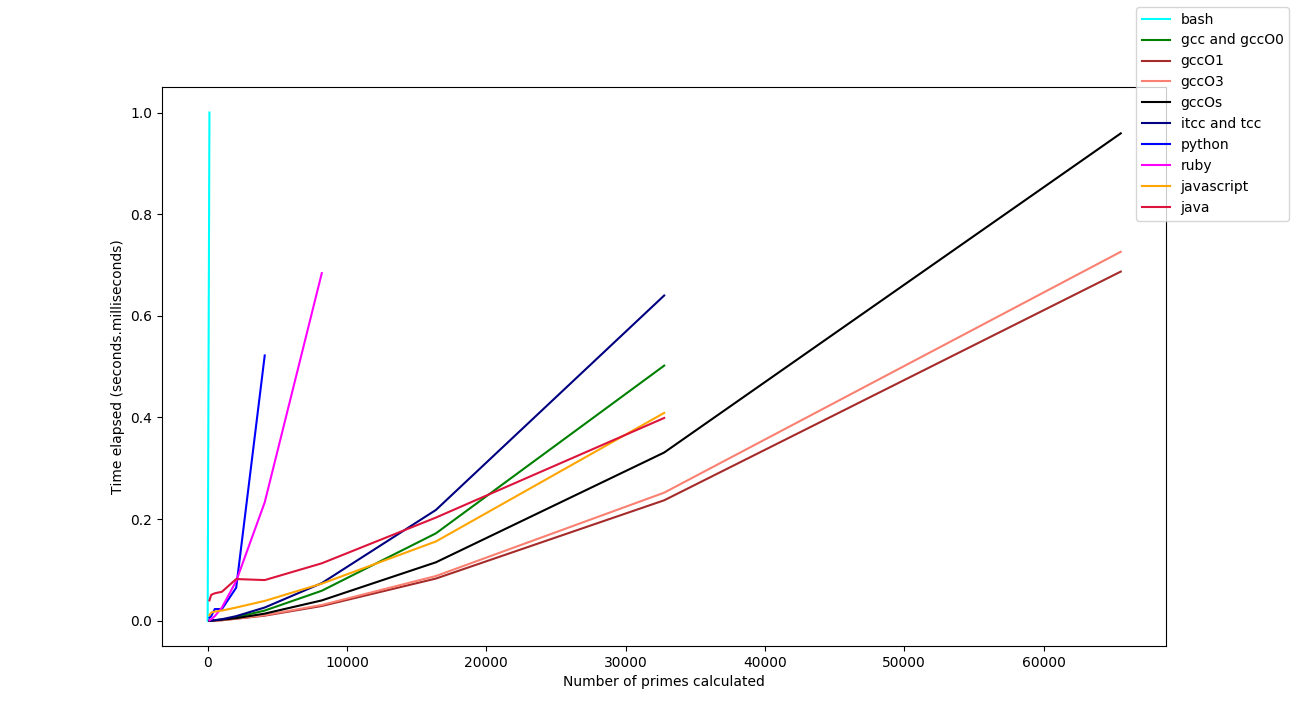

Andrei Bratkovski Pnc2 Data visualization (February 4th, 2018):

Pnc2 data recorded up until it flat-lines (right before the run time exceeds 1 second at the next doubling of the prime qty)

qty bs bs bs bs bs bs bs bs bs bs bs bs

bash gcc gccO0 gccO1 gccO3 gccOs itcc java js python ruby tcc

128 ----- 0.000 0.000 0.000 0.000 0.000 0.000 0.040 0.010 0.002 0.001 0.000

256 ----- 0.000 0.000 0.000 0.000 0.000 0.000 0.042 0.015 0.006 0.003 0.000

512 ----- 0.001 0.001 0.000 0.001 0.001 0.001 0.049 0.018 0.019 0.009 0.001

1024 ----- 0.002 0.002 0.001 0.001 0.002 0.003 0.058 0.019 0.057 0.026 0.003

2048 ----- 0.007 0.007 0.004 0.004 0.005 0.009 0.067 0.026 0.166 0.078 0.009

4096 ----- 0.020 0.020 0.010 0.011 0.014 0.026 0.079 0.038 0.494 0.234 0.026

8192 ----- 0.059 0.059 0.029 0.031 0.040 0.074 0.110 0.071 ----- 0.690 0.074

16384 ----- 0.172 0.172 0.083 0.088 0.115 0.218 0.214 0.159 ----- ----- 0.218

32768 ----- 0.503 0.502 0.237 0.252 0.331 0.640 0.376 0.395 ----- ----- 0.640

65536 ----- ----- ----- 0.687 0.726 0.959 ----- 0.883 1.046 ----- ----- -----

Analysis: From the above data, I can conclude that compiler optimizations and C are king. Being a lower-level, statically typed language that compiles to native code, C is much more raw in its computing than the scripting languages (Python, JavaScript, Ruby), whose interpreters are themselves built in C or C++ and add layers of abstraction on top. The difference is very apparent in the above graph. This brings me to the conclusion that anyone looking to build a truly powerful, high-speed application should seek out lower-level languages. If readability and less demanding code maintenance are more important, then they should seek out scripting languages. It is a battle of performance versus readability.

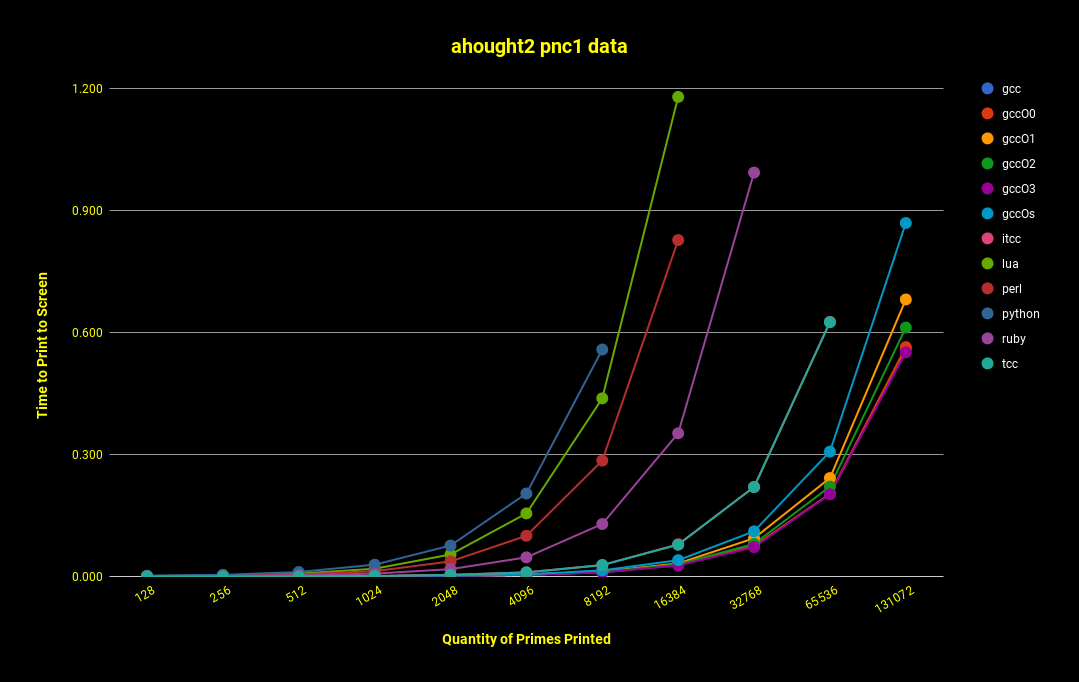

Aaron Houghtaling pnc2 Data Visualization (February 5th, 2018):

qty bs bs bs bs bs bs bs bs bs bs bs bs bs

bash gcc gccO0 gccO1 gccO2 gccO3 gccOs itcc lua perl python ruby tcc

128 ----- 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.001 0.001 0.002 0.000 0.000

256 ----- 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.003 0.002 0.004 0.001 0.000

512 ----- 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.007 0.005 0.011 0.003 0.000

1024 ----- 0.001 0.001 0.001 0.001 0.001 0.001 0.001 0.019 0.013 0.029 0.007 0.001

2048 ----- 0.002 0.002 0.002 0.002 0.001 0.002 0.004 0.054 0.037 0.076 0.018 0.004

4096 ----- 0.004 0.004 0.005 0.004 0.004 0.005 0.010 0.155 0.100 0.204 0.047 0.010

8192 ----- 0.010 0.011 0.012 0.011 0.010 0.015 0.028 0.438 0.285 0.558 0.129 0.028

16384 ----- 0.029 0.029 0.032 0.030 0.027 0.040 0.079 1.179 0.827 ----- 0.352 0.078

32768 ----- 0.074 0.075 0.094 0.080 0.072 0.111 0.220 ----- ----- ----- 0.993 0.220

65536 ----- 0.203 0.203 0.242 0.221 0.202 0.307 0.626 ----- ----- ----- ----- 0.625

131072 ----- 0.560 0.564 0.681 0.612 0.551 0.869 ----- ----- ----- ----- ----- -----

Analysis: Like the rest of the class, I am sure, I realized that low-level languages are the fastest. I was very surprised that there even is such a thing as compiler optimizations, and that they could have such an effect. Compare gccO3 (0.551 seconds at qty=131072) with tcc (0.625 seconds at qty=65536): with nothing changed but the compiler, gccO3 printed twice as many primes as tcc in less time! I also note that my bash version did not even make the scale. It took longer than 2 seconds to print out even 128 primes. The difference between my bash and gccO3 is unfathomable.

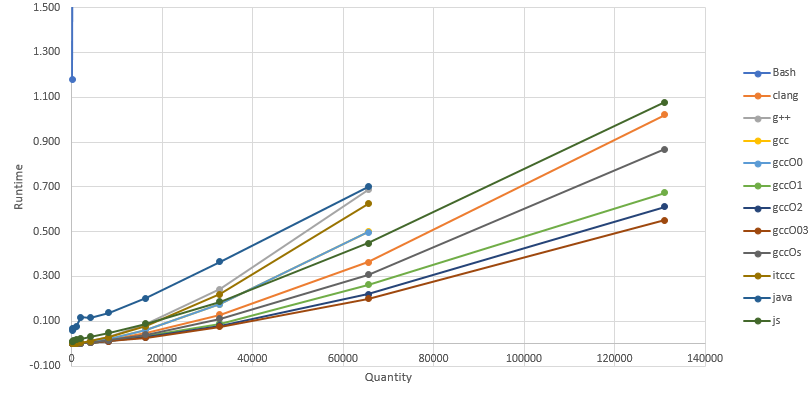

Brandon Strong Pnc2 Data visualization (February 5th, 2018):

qty bash gcc gccO0 gccO1 gccO2 gccO3 gccOs java js lua python

=========================================================================

128 0.957 0.000 0.000 0.000 0.000 0.000 0.000 0.006 0.009 0.001 0.001

256 ----- 0.000 0.000 0.000 0.000 0.000 0.000 0.010 0.012 0.002 0.002

512 ----- 0.000 0.000 0.000 0.000 0.000 0.000 0.018 0.017 0.004 0.006

1024 ----- 0.001 0.001 0.001 0.001 0.001 0.001 0.033 0.020 0.012 0.017

2048 ----- 0.002 0.002 0.001 0.001 0.001 0.001 0.079 0.024 0.034 0.048

4096 ----- 0.004 0.004 0.004 0.004 0.004 0.004 0.168 0.032 0.095 0.127

8192 ----- 0.011 0.011 0.010 0.010 0.010 0.010 0.338 0.050 0.264 0.353

16384 ----- 0.028 0.028 0.026 0.026 0.026 0.027 0.688 0.094 0.718 1.046

32768 ----- 0.075 0.075 0.072 0.071 0.071 0.072 ----- 0.197 ----- -----

65536 ----- 0.205 0.205 0.200 0.196 0.196 0.200 ----- 0.459 ----- -----

131072 ----- 0.563 0.566 0.547 0.544 0.546 0.551 ----- 1.158 ----- -----

262144 ----- ----- ----- ----- ----- ----- ----- ----- ----- ----- -----

Analysis: Alright ya bois, let's go! So it seems as though the higher-level languages are doing worse than the lower-level languages… Bash xD. With that being said, I was expecting the compiler optimizations to do better, but the times are virtually the same, with just minor changes (each was compiled with the makefiles in its directory). That's weird; there must be a reason, but I'm currently not sure what it is. Anyway, there was a nice improvement on the bash implementation.

Bash Optimization

qty Bash(Optimized) Bash

=================================================

128 1.016418 5.4983

256 2.318623 15.3991

512 5.191048 43.7051

1024 11.714127 124.9187

2048 26.564731 ----

4096 60.236809 ----

8192 137.860763 ----

Analysis: How is it that much faster? The change took place within the nested loop. So let's take a look at the original code.

    while [ ${divisor} -lt $((maxDiv+2)) ]; do
        check=$(echo "${primeNum} % ${divisor}" | bc)
        if [ ${check} -eq 0 ] && [ ${divisor} != ${primeNum} ]
        then
            flag=1
            break
        fi
        divisor=$((divisor+1))
    done

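Looking at the check variable, you can see that the expression is piped to bc to find the remainder, which starts a new bc process on every pass through the loop. The same remainder can be computed right in the if statement with bash's built-in arithmetic expansion, which shows crazy improvements.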

    while [ ${divisor} -lt $((maxDiv+2)) ]; do
        if [ $(($primeNum%$divisor)) -eq 0 ] && [ ${divisor} != ${primeNum} ]
        then
            flag=1
            break
        fi
        divisor=$((divisor+1))
    done

Yes, that is the only change, so if you guys have that change to make… your program will be moar betttterrrrr.
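To see the effect of that one change in isolation, here is a minimal sketch that runs the same remainder test both ways. The function names `count_divisors_bc` and `count_divisors_builtin` are made up for illustration and are not part of the original program; each simply counts how many values from 2 to n-1 divide n evenly, so both do the same number of remainder operations.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for comparison only; not taken from the original script.

# Remainder through bc: every pass pipes the expression to a new bc process.
count_divisors_bc() {
    local n=$1 divisor=2 count=0 check
    while [ "${divisor}" -lt "${n}" ]; do
        check=$(echo "${n} % ${divisor}" | bc)
        if [ "${check}" -eq 0 ]; then
            count=$((count + 1))
        fi
        divisor=$((divisor + 1))
    done
    echo "${count}"
}

# Remainder through arithmetic expansion: evaluated inside the shell itself.
count_divisors_builtin() {
    local n=$1 divisor=2 count=0
    while [ "${divisor}" -lt "${n}" ]; do
        if [ $((n % divisor)) -eq 0 ]; then
            count=$((count + 1))
        fi
        divisor=$((divisor + 1))
    done
    echo "${count}"
}

# Example comparison (the first line needs bc installed):
#   time count_divisors_bc 5000
#   time count_divisors_builtin 5000
```

Both functions should print the same count for the same input; only the time taken should differ, since the bc version pays for a process launch on every iteration.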

GCJ Vs Javac

Qty Gcj Javac

=========================================================

128 0.006 0.042

256 0.01 0.05

512 0.018 0.076

1024 0.033 0.095

2048 0.079 0.126

4096 0.168 0.168

8192 0.338 0.271

16384 0.688 0.431

==========================================================

Analysis: Just stating that this is the same code for both, but one is compiled to bytecode with javac and run on the JVM, and the other is compiled ahead of time to native code with GCJ. At the lower quantities GCJ does better (0.006 versus 0.042 seconds at 128), the two tie at 4096, and in the long run javac performs better (0.431 versus 0.688 seconds at 16384).
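The crossover point can be read straight off the table. As a small worked example, the sketch below stores the table's times as whole milliseconds (bash arithmetic is integer only) and reports the first qty where javac is strictly faster than GCJ. The function name `first_javac_win` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper; the data rows are the GCJ vs Javac table in milliseconds.

# Prints the first qty at which javac's time is strictly below GCJ's.
first_javac_win() {
    local qty gcj_ms javac_ms
    while read -r qty gcj_ms javac_ms; do
        if [ "${javac_ms}" -lt "${gcj_ms}" ]; then
            echo "${qty}"
            return 0
        fi
    done <<'EOF'
128 6 42
256 10 50
512 18 76
1024 33 95
2048 79 126
4096 168 168
8192 338 271
16384 688 431
EOF
    return 1
}

first_javac_win   # prints 8192
```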

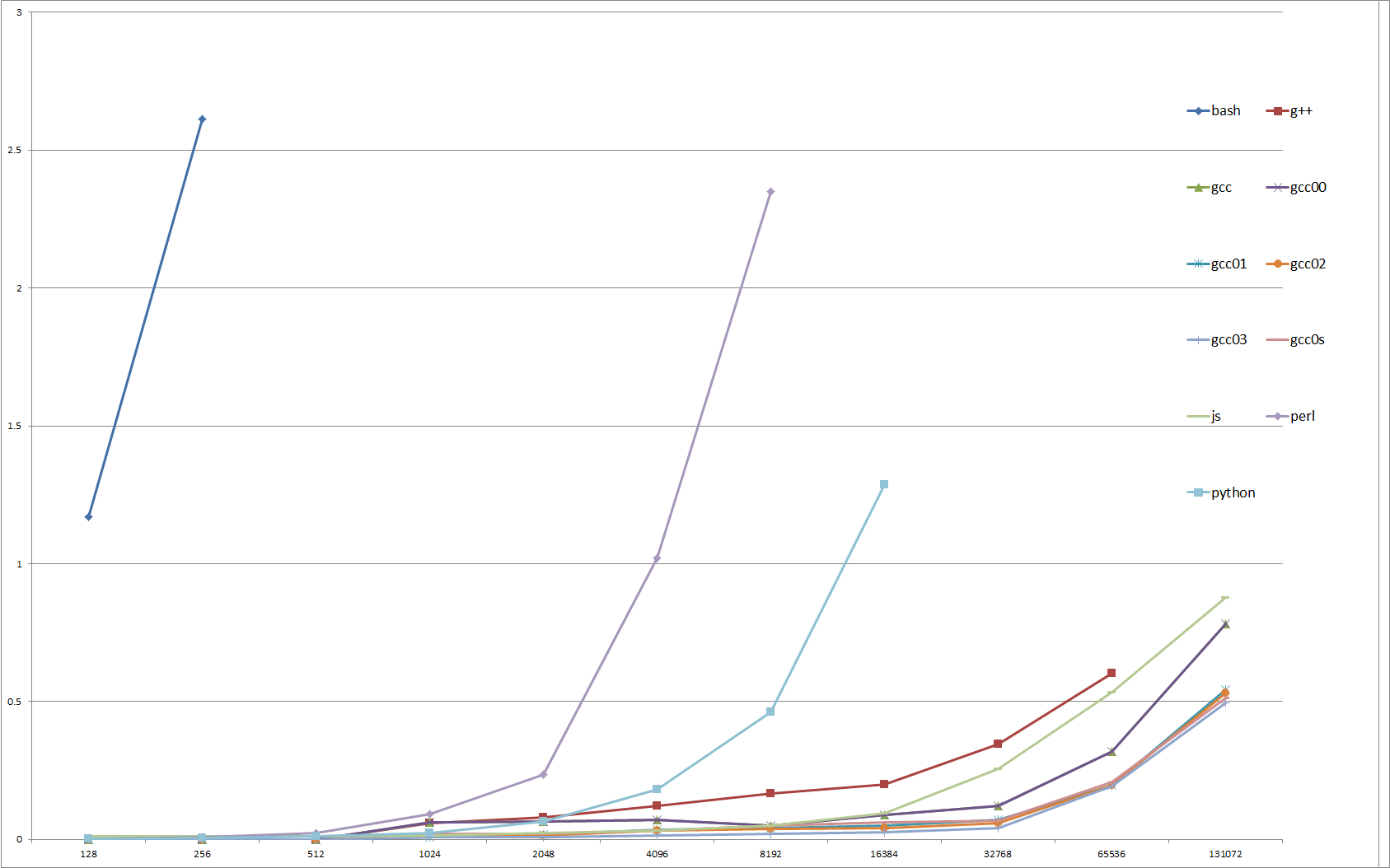

Matthew Chon pnc2 Data Visualization (February 6th, 2018):

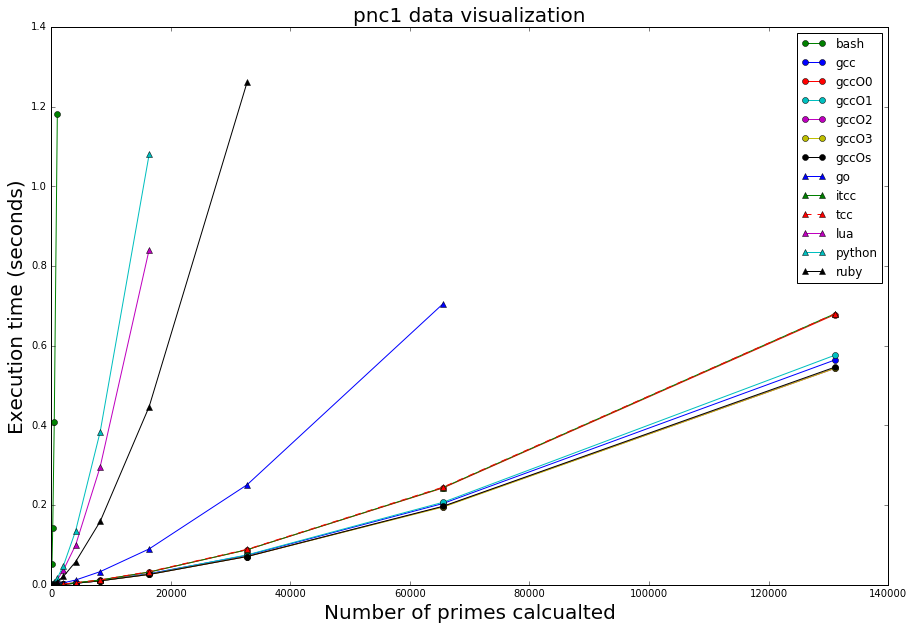

graph

qty bash clang g++ gcc gccO0 gccO1 gccO2 gccO3 gccOs itccc java js

=======================================================================================================

128 1.180 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.068 0.009

256 2.545 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.058 0.011

512 5.843 0.001 0.001 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.066 0.016

1024 13.298 0.002 0.002 0.001 0.001 0.001 0.001 0.001 0.001 0.001 0.075 0.018

2048 29.695 0.004 0.004 0.003 0.003 0.002 0.002 0.001 0.002 0.004 0.119 0.022

4096 ---- 0.006 0.012 0.008 0.008 0.005 0.004 0.004 0.005 0.010 0.115 0.030

8192 ---- 0.017 0.031 0.023 0.023 0.012 0.011 0.011 0.015 0.028 0.137 0.048

16384 ---- 0.047 0.087 0.063 0.063 0.032 0.029 0.027 0.040 0.078 0.203 0.089

32768 ---- 0.130 0.243 0.176 0.176 0.088 0.080 0.076 0.111 0.220 0.366 0.187

65536 ---- 0.364 0.686 0.499 0.497 0.262 0.221 0.200 0.307 0.624 0.701 0.448

131072 ---- 1.023 ---- ---- ---- 0.674 0.612 0.551 0.867 ---- ---- 1.077

262144 ---- ---- ---- ---- ---- ---- ---- ---- ---- ---- ---- ----

Analysis: As shown in the graph and chart, the C implementations of pnc destroyed the other languages in runtime. Compiling with gccO3 gave the best runtime performance. It can be concluded that the lower-level language outperformed the higher-level languages in both runtime and the qty reached.

Benjamin Schultes PNC1:

All Scripts

As expected, the C programs outperformed all other scripts. There isn't a lot of data shown on the chart because many of the C programs had very similar run times.

qty bash gcc gccO0 gccO1 gccO2 gccO3 gccOs go lua python3 ruby

64 1.018 0 0 0 0 0 0 0 0 0.001 0

128 ----- 0 0 0 0 0 0 0 0.001 0.002 0.001

256 ----- 0 0 0 0 0 0 0 0.002 0.007 0.002

512 ----- 0 0 0 0 0 0 0.001 0.006 0.019 0.007

1024 ----- 0.001 0.001 0.001 0.001 0.001 0.001 0.002 0.018 0.056 0.02

2048 ----- 0.003 0.003 0.002 0.002 0.002 0.003 0.005 0.044 0.155 0.056

4096 ----- 0.008 0.008 0.007 0.007 0.007 0.008 0.012 0.123 0.451 0.162

8192 ----- 0.023 0.023 0.019 0.019 0.019 0.023 0.033 0.36 ----- 0.454

16384 ----- 0.065 0.065 0.052 0.052 0.055 0.065 0.091 1.003 ----- 1.312

32768 ----- 0.183 0.183 0.145 0.145 0.145 0.182 0.254 ----- ----- -----

65536 ----- 0.517 0.517 0.409 0.408 0.408 0.516 0.714 ----- ----- -----

131072 ----- ----- ----- 1.156 1.154 1.154 ----- ----- ----- ----- -----

Christian Cattell pnc2

qty bash g++ gcc gccO0 gccO1 gccO2 gccO3 gccOs js perl python

================================================================================================

128 1.169 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.010 0.003 0.001

256 2.613 0.000 0.000 0.000 0.000 0.000 0.000 0.010 0.011 0.007 0.004

512 5.944 0.000 0.051 0.051 0.000 0.000 0.000 0.012 0.012 0.023 0.011

1024 ----- 0.059 0.061 0.061 0.008 0.016 0.008 0.021 0.014 0.092 0.023

2048 ----- 0.081 0.065 0.065 0.017 0.014 0.009 0.021 0.024 0.235 0.065

4096 ----- 0.121 0.071 0.071 0.034 0.031 0.013 0.032 0.032 1.020 0.182

8192 ----- 0.167 0.050 0.050 0.041 0.039 0.019 0.049 0.050 2.351 0.462

16384 ----- 0.201 0.089 0.089 0.049 0.041 0.027 0.061 0.094 ----- 1.287

32768 ----- 0.347 0.122 0.122 0.071 0.059 0.041 0.069 0.256 ----- 3.657

65536 ----- 0.602 0.319 0.319 0.196 0.197 0.193 0.209 0.534 ----- -----

131072 ----- ----- 0.783 0.783 0.544 0.531 0.496 0.512 0.879 ----- -----

Thoughts

Bash is really, really bad actually. Makes you wonder just how much is really going on when you run it. I expected js to run the worst of the bunch (minus bash), so its results surprised me. When making the C++ program, I somewhat tried to make it slow to see what kind of performance it would get, so I am somewhat surprised by the results there. It seems like the C++ program could probably get pretty efficient when handled correctly.

As for writing everything, it was actually not as bad as I thought it would be. Core concepts translate really well to other languages. It is interesting to see the differences in the languages, but they are seemingly just syntactical. This is just referring to the basic stuff like loops and conditionals. You would probably start to see larger differences as you get to more advanced parts of a language.

Kris Beykirch (kbeykirc)

Reflections

The first thing that has to be said is that bash is simply the worst: the lowest number of calculations that didn’t time out, and the bash curve is far, far to the left of everything else. Interestingly, next in line is python, the same language I used to create this graph. I didn’t have a lot of imports in my python code (no square root function, thanks to using the approximated square root trick instead), but if I were using it for something sophisticated like graphing with the matplotlib library, and had to do a lot of that in a program, I can imagine that it would be rather slow. I remember using python for heavy calculations back in my physics days, and waiting forever for something to execute (that being said, the scipy library that I used to do those calculations in the first place made it an invaluable tool for exactly what I used it for, even if it took a while).

Next best after python is lua, and then ruby, which are all in a roughly comparable group. Go improves quite a bit timewise, and the C programs outstrip them all. itcc and tcc perform ever so slightly worse than the gcc variants, but compared to the distance between go and itcc/tcc, the distance between itcc/tcc and gcc is pretty minimal.

General trend seems to be that the higher the language level, the worse the performance is. This makes sense, since there’s more overhead, and the language isn’t necessarily well designed for heavy computation (bash is certainly not made for this, a warning that I saw on a bash shell scripting guide and completely ignored for the purposes of this project).

The trade-off, though, is that some of the higher-level languages looked far nicer than C did (bash being the exception), with the programs looking cleaner and probably more self-explanatory to somebody new to coding. They were also shorter… most of my C programs were around 90 to 100 lines long, most of the other programs a mere 50 to 60 lines. It’s not a huge difference here, but for longer programs the extra space spent on writing functions and generally doing things the “hard” way in C (not to mention the curly braces) could certainly add up. But when pnc1 performance is concerned, C is certainly much better than all of the other languages I used.

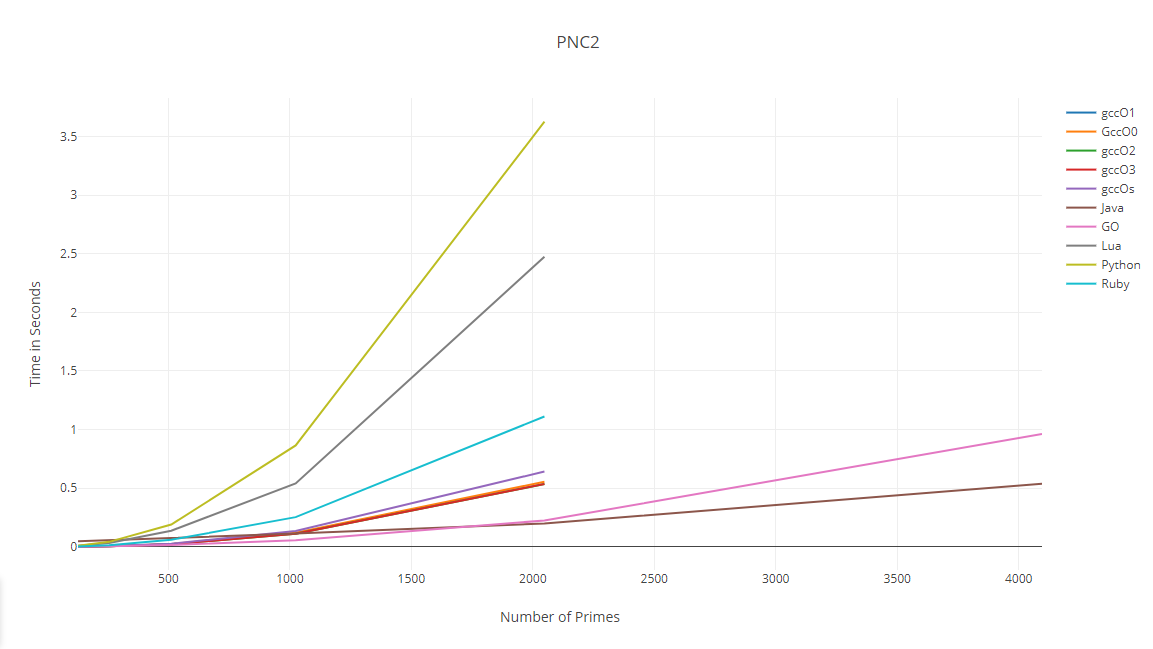

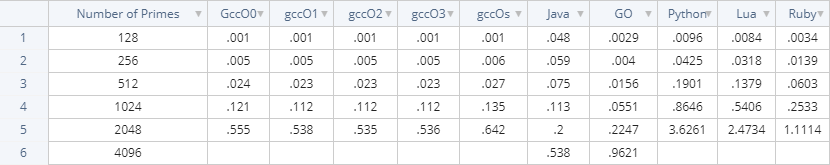

Kevin Todd (ktodd3)

For PNC1 I ended up using primeregb instead, which gave some different results compared to the others, who used primeregbs.

Thoughts:

I was surprised by the results of my testing, as Java and Go seemed to beat out the others. I expected C++ to be the most efficient, as it is a lower-level language. Python was the least efficient, which in hindsight isn't very surprising: it was rather easy to use, which could mean it's a higher-level language, although being 'easy' doesn't guarantee that a language is high level.

As to why C++ lost, there could be a bunch of different factors. One reason may be how I coded the program in the other languages: I may have unintentionally made the other code slightly more efficient while my original was lacking. However, I don't think this is the right answer, as I tried to keep the code very similar and didn't have much trouble doing so. Another idea as to why C++ lost out might be the calculation itself: I used primeregb, which only breaks when it finds out a number is not a prime and does not use the square root trick. Maybe C++ is much more efficient at using the square root in calculations, and that is why it shines when the trick is used. Also, the number of primes I reached was pretty low compared to other students', so C++ didn't get a chance to show what it can do in longer-running calculations.
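To make the difference between the two variants concrete, here is a minimal sketch in bash. It assumes that primeregb means "break on the first divisor found" and that primeregbs adds the square root trick, approximated here by comparing divisor times divisor against the candidate so that no square root function is needed. The function names `is_prime_b` and `is_prime_bs` are hypothetical and this is not any student's actual submission.

```bash
#!/usr/bin/env bash
# Hypothetical sketches of the two variants; assumptions are noted above.

# Break only: stop at the first divisor, otherwise try every value below n.
is_prime_b() {
    local n=$1 divisor=2
    if [ "${n}" -lt 2 ]; then
        return 1
    fi
    while [ "${divisor}" -lt "${n}" ]; do
        if [ $((n % divisor)) -eq 0 ]; then
            return 1    # composite: leave the loop early
        fi
        divisor=$((divisor + 1))
    done
    return 0
}

# Break plus approximated square root: stop once divisor*divisor exceeds n.
is_prime_bs() {
    local n=$1 divisor=2
    if [ "${n}" -lt 2 ]; then
        return 1
    fi
    while [ $((divisor * divisor)) -le "${n}" ]; do
        if [ $((n % divisor)) -eq 0 ]; then
            return 1    # composite: leave the loop early
        fi
        divisor=$((divisor + 1))
    done
    return 0
}

# Example: both agree that 97 is prime and 91 (7 * 13) is not.
#   is_prime_b 97 && echo "97 is prime"
#   is_prime_bs 91 || echo "91 is not prime"
```

For a prime such as 97, the break-only version tries every divisor from 2 through 96 (95 remainder operations), while the square root version stops after divisor 9 (8 remainder operations). The gap widens as the candidates grow, so timings from the two variants are not directly comparable.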